At work, we use git, and git supports hooks, including pre-commit hooks. Rather than write my own, and do it poorly, I’m using a tool called pre-commit, created by engineers at Yelp.com. To them, I offer my thanks.

ip and ss: better than ifconfig and netstat

I’ve been using Linux for a while now, so typing certain commands is fairly ingrained, like ‘ifconfig’ and ‘netstat’. I know about “ip addr”, which is more modern than ifconfig, and I use it sometimes.

This week, I learned about ‘ss’, which is faster than ‘netstat’, and does more. My favorite invocation is “ss -tlp” to show programs listening on TCP sockets.
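Here is a small sketch of that invocation wrapped in a shell function. The function name `listening_tcp` is my own invention for illustration, and I have added `-n` (numeric ports), which is not part of the invocation above. Process names for sockets owned by other users generally only show up when run as root.

```shell
#!/bin/sh
# listening_tcp is a hypothetical helper name, not part of ss itself.
listening_tcp() {
    # -t  TCP sockets only
    # -l  listening sockets only
    # -n  numeric ports instead of service names (my addition)
    # -p  show the process that owns each socket
    ss -tlnp
}

# Only run it if ss is installed (it ships with the iproute2 package).
if command -v ss >/dev/null 2>&1; then
    listening_tcp
else
    echo "ss not found" >&2
fi
```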

Ubuntu, ecryptfs, and changing password

I changed my password on my Ubuntu system this week, and then found that I couldn’t log in, except on a virtual terminal.

My home directory is encrypted, and apparently, it’s better to change a password using the graphical utilities, rather than the command line utilities. The following article was quite helpful in recovering:

http://askubuntu.com/questions/281491/cant-log-in-after-password-change-ecryptfs

OpenWest notes

This past weekend, I attended the excellent #OpenWest conference, and I presented Scaling RabbitMQ.

The volunteers that organized the conference deserve a huge amount of thanks. I can’t imagine how much work it was. I should also thank the conference sponsors.

A local group of hardware engineers designed an amazing conference badge, built from a circuit board. They deserve a big “high-five”. There was a soldering lab where I soldered surface mount components for the first time in my life – holding the components in place with tweezers. I bought the add-on kit for $35 that included a color LCD screen and Parallax Propeller chip. It took me 45 minutes to do the base kit, and two hours to do the add-on kit. I breathed a sigh of relief when I turned on the power, and it all worked.

The speakers did a great job, and I appreciate the hours they spent preparing. I wish I could have attended more of the sessions.

Among others, I attended sessions on C++11, Rust, Go, Erlang, MongoDB schema design, .NET Core, wireless security, SaltStack, and digital privacy.

I’m going to keep my eye on Rust, want to learn and use Go, and use the new beacon feature of Salt Stack. Sometime in the future, I’d like to use the new features of C++11.

The conference was an excellent place to have useful side-conversations with vendors, speakers, and past colleagues. It was a great experience.

Linux, time and the year 2038

Software tends to live longer than we expect, as do embedded devices running Linux. Those that want to accurately handle time through the year 2038 and beyond will need to be updated.
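The date comes from simple arithmetic: a signed 32-bit time value can count at most 2^31 - 1 seconds past the Unix epoch. A quick sketch, assuming GNU date (the `-d @SECONDS` form is GNU syntax; BSD date spells it differently):

```shell
#!/bin/sh
# Largest value a signed 32-bit time counter can hold.
max32=$(( (1 << 31) - 1 ))
echo "$max32"    # 2147483647

# Convert that many seconds since the epoch to a UTC timestamp.
# Assumes GNU date.
date -u -d "@$max32" '+%Y-%m-%d %H:%M:%S UTC'    # 2038-01-19 03:14:07 UTC
```

One second after that, a 32-bit counter wraps around to a negative number.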

Fifteen years after Y2K, Linux kernel developers continue to refine support for time values that will get us past 2038. Jonathan Corbet, editor of LWN.net, explains the recent work in his typical lucid style: https://lwn.net/Articles/643234

It sounds like ext3 and NFSv3 filesystems will need to go the way of the dodo, due to lack of support for 64-bit time values, while XFS developers are working on adding support to get us past 2038. By that time, many of us will have moved on to newer file systems.

One comment linked to this useful bit of information on time programming on Linux systems: http://www.catb.org/esr/time-programming/. The summary is:

To stay out of trouble, convert dates to Unix UTC on input, do all your calculations in that, and convert back to localtime as rare as possible. This reduces your odds of introducing a misconversion and spurious timezone skew.

It’s also excellent advice for any back-end system that deals with data stored from devices that span a continent or the world, although it doesn’t necessarily eliminate daylight saving time bugs.
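As a rough illustration of that advice, here is a sketch that converts two timestamps with different UTC offsets to epoch seconds on input, does the arithmetic there, and only formats at the end. The timestamps are made up, and the `-d` parsing assumes GNU date.

```shell
#!/bin/sh
# Two hypothetical device timestamps, each with its own UTC offset.
# Convert to epoch seconds (UTC-based) as soon as they arrive.
start=$(date -d '2015-05-09 09:00:00 -0600' +%s)
end=$(date -d '2015-05-09 17:30:00 +0100' +%s)

# All calculations happen on the epoch values.
elapsed=$(( end - start ))
echo "$elapsed"    # 5400 seconds, i.e. 90 minutes

# Store and log in UTC.
date -u -d "@$end" '+%Y-%m-%dT%H:%M:%SZ'    # 2015-05-09T16:30:00Z

# Convert to local time only for display, as late as possible.
date -d "@$end"
```

Comparing the two wall-clock times directly would suggest eight and a half hours elapsed, which is the kind of skew the advice is meant to avoid.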

Containerization – the beginning of a long journey

I read this today, and thought it’s worth sharing:

“The impact of containerization in redefining the enterprise OS is still vastly underestimated by most; it is a departure from the traditional model of a single-instance, monolithic, UNIX user space in favor of a multi-instance, multi-version environment using containers and aggregate packaging. We are talking about nothing less than changing some of the core paradigms on which the software industry has been working for the last 20 – if not 40 – years.”

And yet it is tempered with reality:

“…we are really only at the beginning of a long journey…”

http://rhelblog.redhat.com/2015/05/05/rkt-appc-and-docker-a-take-on-the-linux-container-upstream/

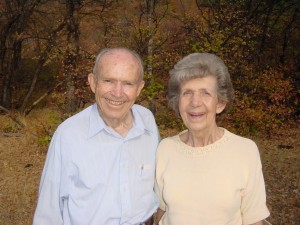

In memoriam of Artha Robinson

My last remaining grandparent, Artha Robinson, passed away last Saturday. I’m glad to have known her and my grandfather well, to have been able to visit with them and laugh together. I will miss grandma, although I’m glad that she is free from physical pain. As my grandfather often said, “growing old is not for the faint of heart.”

Grandma always expressed interest in her grandchildren and great grandchildren. She cared deeply for others and would go out of her way to help them. During the past few years, she read books to one of her neighbors who had lost her eyesight.

I remember eating with my grandparents, and finding out that dinner didn’t just include one vegetable — it included two or three, because eating plenty of vegetables is a healthy habit. Meals included a myriad of vitamin supplements, because they wanted to live good and long lives. Grandpa liked to do the grocery shopping, and they’d prepare meals together.

Grandma would jump on her indoor exercise trampoline and do aerobics. Grandpa gardened, and went walking.

They did their own taxes, and every year when April 15th rolled around, the kitchen table would be filled with papers and folders, and they’d file for an extension so they could finish.

It seemed that even when grandma felt terrible, she had her hair done beautifully.

Grandma was the hub of our extended family, and now that she’s gone, it will be interesting to see how well we stay in touch. Perhaps Facebook will help that process along, but it’s not as good at forming relationships and renewing friendships as getting together, face-to-face.

Ubuntu and .local hostnames in a corporate network

In the past, I’ve had trouble getting my Ubuntu machine to resolve the .local hostnames at work. I didn’t know why Ubuntu had this problem while other machines did not.

When I did a DNS lookup, it failed, and ping of host.something.local failed. Yet ping of the hostname without the .something.local extension worked. Odd. I googled various terms, but nothing useful came up. I tried watching the DNS lookup with tcpdump, but it didn’t capture anything.

Eventually, I thought of using ‘strace ping host.something.local’ to see what was happening, and it turns out that DNS was never being queried — it was talking to something called avahi.

I googled “avahi”, and was reminded that hostname resolution is configured in /etc/nsswitch.conf. In the case of Ubuntu, it’s configured to send *.local requests to Avahi (mdns4_minimal), and no further — i.e. if Avahi doesn’t resolve it, it doesn’t try DNS.

In my case, I want corporate DNS to resolve .local addresses. So I changed my /etc/nsswitch.conf from this:

hosts: files mdns4_minimal [NOTFOUND=return] wins dns mdns4

to this:

hosts: files wins dns mdns4_minimal mdns4

And now my Ubuntu development machine can communicate with our internal .local machines without having to resort to using IP addresses, short names, or having to place the mapping in /etc/hosts.
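For anyone who wants to script the change, here is a cautious sketch that rehearses the edit on a scratch copy rather than on /etc/nsswitch.conf itself. The sed expression is my own; it replaces the whole hosts line, so it assumes the line looks like the one shown above.

```shell
#!/bin/sh
# Rehearse on a scratch file so nothing under /etc is touched.
tmp=$(mktemp)
printf 'hosts: files mdns4_minimal [NOTFOUND=return] wins dns mdns4\n' > "$tmp"

# Replace the hosts line so DNS is consulted before mDNS.
sed 's/^hosts:.*/hosts: files wins dns mdns4_minimal mdns4/' "$tmp" > "$tmp.new"

new_hosts=$(grep '^hosts:' "$tmp.new")
echo "$new_hosts"    # hosts: files wins dns mdns4_minimal mdns4

rm -f "$tmp" "$tmp.new"

# On the real system, after editing /etc/nsswitch.conf, a lookup that goes
# through the same resolution order can be checked with:
#   getent hosts host.something.local
```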

When your USB devices can be used against you

Interesting: “about half of your devices, including chargers, storage, cameras, CD-ROM drives, SD card adapters, keyboards, mice, phones, and so on, are all likely to be proven easily reprogrammable and trivially used to… attack software. Unfortunately, the only current solution on the horizon is to not share any USB devices between computers.” — Dragos Ruiu

Grepping archived, rotated log files — in order

Say you’ve got the following log files with the oldest entries in myapi.log.3.gz:

myapi.log.1.gz

myapi.log.2.gz

myapi.log.3.gz

If you want to ‘grep’ them for a string, in order of date, oldest to newest, there’s no need to extract them one at a time, and there’s no need to concatenate the files first. Use sort to put the files in the proper order, and zgrep to search through the compressed files.

Here’s how to order the file list:

ls myapi.log.*.gz | sort -nr -t . -k 3,3

Here’s how to ‘zgrep’ them in the proper order:

ls myapi.log.*.gz | sort -nr -t . -k 3,3 | xargs zgrep "404"
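To convince yourself the ordering works, here is a throwaway sketch that builds a few sample archives in a temporary directory and runs the same pipeline. The log contents are made up. I included a myapi.log.10.gz to show why the numeric sort matters: a plain lexical sort would place 10 next to 1.

```shell
#!/bin/sh
# Build sample rotated logs in a scratch directory (contents are invented).
dir=$(mktemp -d)
echo 'oldest GET /a 404' | gzip > "$dir/myapi.log.10.gz"
echo 'middle GET /b 200' | gzip > "$dir/myapi.log.2.gz"
echo 'newest GET /c 404' | gzip > "$dir/myapi.log.1.gz"

# Same pipeline as above: sort on the third dot-separated field,
# numerically, highest (oldest) first, then search the compressed files.
result=$(cd "$dir" && ls myapi.log.*.gz | sort -nr -t . -k 3,3 | xargs zgrep "404")
echo "$result"

rm -rf "$dir"
```

The match from myapi.log.10.gz comes out first and the one from myapi.log.1.gz last. Like the original one-liner, this assumes file names without spaces.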